[thx to Tom Igoe for making me write all this down]

Question: Can we teach people how to debug? We often talk about “the art of debugging”, but is it really an art? Is it a science? A mixture of both?

I’ve never given thought to explicitly teaching a unit on debugging; it’s always something that gets covered along the way while teaching coding and physical computing. That isn’t surprising: I don’t remember anyone teaching any sort of debugging in any of my CS classes, or ever thinking of it as an academic subject. It was mostly treated as a practical thing that we’d pick up after we got work. You get your first few coding jobs, you have bugs to fix, and someone senior (usually) comes by and teaches you what they know about finding bugs in software.

However, I think that where I really learned how to debug wasn’t while writing software; it was while working on cars and motorcycles. When you’re faced with a motor that won’t start, or a strange noise, or an intermittent failure in some part of the electrics, you have a problem space much bigger than you can see all at once. You have to break the problem down, rule out areas that obviously can’t be at fault, and try to focus on the areas where the problem might lie. It’s an iterative process of selecting smaller and smaller domains until you get to the point where you can start asking questions that are simple enough to be easily answered. “Why won’t the car start?” can’t be answered until you’ve answered all the little questions, like “is the battery fully charged?” or “is the fuel pump sending fuel to the carbs?” or “are the spark plugs firing?”. More often than not, answering those questions simply leads to even more big questions that have to be broken down again: “ok, why isn’t power getting from the battery to the spark plugs?”
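To make the "smaller and smaller domains" idea concrete, here is a minimal sketch in Python. It uses bisection, which is my own choice of technique for illustration rather than anything a mechanic would call by that name. The wiring path, the `make_probe` stand-in for a multimeter, and the function names are all hypothetical, and the sketch assumes the battery itself is live and that once power is lost it stays lost downstream.

```python
# Hypothetical wiring path, ordered from the battery to the spark plugs.
PATH = ["battery", "main fuse", "ignition switch", "coil", "distributor", "spark plugs"]


def make_probe(last_live_index):
    """Stand-in for a multimeter: power reaches every point up to the break."""
    def has_power(index):
        return index <= last_live_index
    return has_power


def first_dead_point(path, has_power):
    """Halve the search area until the first point without power is found.

    Assumes path[0] is live and that power never comes back downstream of a break.
    Returns None if power reaches the end of the path.
    """
    live, dead = 0, len(path) - 1
    if has_power(dead):
        return None  # power gets all the way through; look elsewhere
    while dead - live > 1:
        middle = (live + dead) // 2
        if has_power(middle):
            live = middle   # fault is further downstream
        else:
            dead = middle   # fault is here or further upstream
    return path[dead]


# Simulate a break between the ignition switch (index 2) and the coil.
print(first_dead_point(PATH, make_probe(last_live_index=2)))  # → coil
```

Each probe answers one small yes/no question ("is there power here?") and throws away half of the remaining problem space, which is the point: you never have to hold the whole car in your head at once.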

Another issue is that in the physical world, you don’t have x-ray vision (debuggers) or the ability to replicate the problem over and over without causing physical damage (“let’s add some printf’s and run it again”). You have to learn to break the problem down into tests that won’t damage the engine or make the problem worse. You also can’t magically make most of the car go away with #ifdef’s in order to isolate the problem; you have to physically connect and disconnect various things, both for safety reasons and so that you can perform proper tests. (Always remove the ground strap from the negative pole of the battery. No, really. Trust me on this.)

When unit testing started gaining in popularity in the software dev world, it made perfect sense to me. This is the way I fix a vehicle: break the problem down into increasingly smaller chunks, isolating unrelated systems from one another, to the point that I can ask “Has the flayrod gone askew on treadle, yes or no?” instead of being stuck at “there’s a problem at the mill”. Granted, we use these tests to prevent bugs in code under development, but adding them to an existing code base often reveals all sorts of “oh, so that’s why it makes that odd noise on Tuesdays after lunch” bugs that were never worth tracking down. The problem with unit testing, however, is that you’re usually adding it from the bottom up while you’re actively focused on the task of software development. Either you’re almost ruling out the need for debugging later by doing extensive testing now, or you’re adding it to an existing code base to “increase stability,” as they say. (In other words, there are all sorts of mildly annoying bugs we can’t find easily, so let’s just add unit tests and hope that fixes things.)
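For anyone who hasn't seen one, here is roughly what "yes or no?" looks like as a unit test. This is only a sketch: `led_current_ma` is a made-up function for illustration, the component values are assumptions, and the tests are plain functions with `assert` statements rather than any particular test framework.

```python
def led_current_ma(supply_v, forward_v, resistor_ohm):
    """Current through an LED and its series resistor, in milliamps (Ohm's law)."""
    if resistor_ohm <= 0:
        raise ValueError("series resistor must be greater than zero ohms")
    if supply_v <= forward_v:
        return 0.0  # not enough voltage to turn the LED on at all
    return (supply_v - forward_v) / resistor_ohm * 1000.0


def test_led_lights_with_enough_voltage():
    # 5 V supply, 2 V forward drop, 220 ohm resistor (illustrative values)
    assert led_current_ma(5.0, 2.0, 220.0) > 0.0


def test_led_stays_dark_below_forward_voltage():
    assert led_current_ma(1.5, 2.0, 220.0) == 0.0


def test_missing_resistor_is_rejected():
    try:
        led_current_ma(5.0, 2.0, 0.0)
    except ValueError:
        return
    raise AssertionError("expected a ValueError for a zero-ohm resistor")


# Each test asks exactly one question and answers it yes (passes) or no (raises).
for test in (test_led_lights_with_enough_voltage,
             test_led_stays_dark_below_forward_voltage,
             test_missing_resistor_is_rejected):
    test()
```

None of these tests knows or cares about the rest of the program, which is the software version of disconnecting the battery before poking at the starter.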

My gut feeling is that there’s a pattern involved in debugging both hardware and software problems, and that the pattern is similar to other patterns we use in hacking and design. I believe “my car won’t start” and “my LED won’t light” can be solved using similar methodologies to generate domain-specific questions. Each domain requires its own set of knowledge and specialized tools, but I think the process for fixing each problem is similar.

So how do we teach debugging? I think we start by teaching the good ol’ scientific method, translated to computational space:

- Have you solved this problem before? If so, repeat your previous investigation to see if it’s happened again.

- Define questions that you can answer with simple tests.

- Perform tests and collect data.

- Analyze results.

- Repeat the steps above until the problem is solved.
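As a rough illustration, the steps above can be sketched as a loop over simple yes/no questions. Everything here is hypothetical: the `circuit` dictionary stands in for readings you would really take with a multimeter, and the questions and the 4.5 V threshold are just examples I picked for the "my LED won't light" case.

```python
from typing import Callable, Dict, List, Optional, Tuple

# Hypothetical snapshot of a breadboard; real values would come from measurements.
circuit: Dict[str, object] = {
    "supply_volts": 5.0,
    "resistor_ohms": 220,
    "led_anode_to_positive": False,  # the LED has been plugged in backwards
}

# Each entry pairs a question with a simple test that answers it yes (True) or no (False).
Check = Tuple[str, Callable[[dict], bool]]

checks: List[Check] = [
    ("Is there power on the supply rail?", lambda c: c["supply_volts"] > 4.5),
    ("Is a series resistor in place?", lambda c: c["resistor_ohms"] > 0),
    ("Is the LED's anode toward positive?", lambda c: c["led_anode_to_positive"]),
]


def first_failed_question(state: dict, questions: List[Check]) -> Optional[str]:
    """Run each test, record the answer, and stop at the first 'no'."""
    for question, test in questions:
        passed = test(state)
        print(f"{question} {'yes' if passed else 'no'}")
        if not passed:
            return question
    return None  # every question came back 'yes'; time to ask new questions


culprit = first_failed_question(circuit, checks)
```

With the snapshot above, the loop stops at the polarity question. Fix that, run it again, and if everything comes back "yes" while the LED is still dark, you need a new round of questions.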

Ok, granted, that seems pretty obvious, but it took us humans a really long time to sort that out and write it down. I suspect most of us couldn’t recite the scientific method off the top of our heads, especially if we didn’t study science in high school or college.

Using these steps also requires a certain amount of domain-specific knowledge and the ability to choose and use the appropriate tools. If I don’t know ground from positive, or that current can only flow through an LED one way, I’m not going to get very far figuring out why my LED won’t turn on, because I’ll never be able to ask the correct questions.

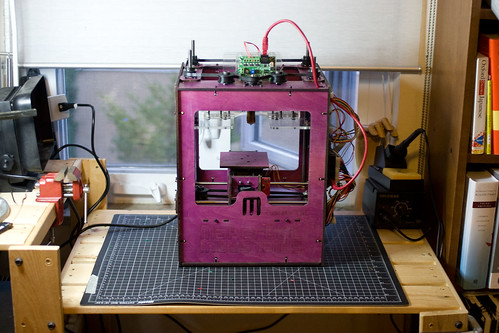

In theory, what we’re teaching when we teach computing is the necessary domain-specific knowledge and tools, so the student should be able to debug simple problems in their own work. What I’m suggesting is that we also hammer on the above methodology any time a student shows up saying, “my LED won’t turn on”. Make the student break the problem down and generate the questions, then show the student how to answer those questions if they’re unable to do so on their own. If the student can ask, “is there power to the LED?” then you can show them how to use a multimeter to answer that question. If the student can’t ask the right questions, say because they’re still unclear on polarity, then there’s a bigger problem: the student doesn’t have the domain-specific knowledge to turn the LED on in the first place.

I think we can teach debugging, even though we weren’t taught debugging when we were learning. Not only can we, I think we do students a disservice if we don’t teach debugging as a core competency.

Footnotes: “Kit-building isn’t learning” and “It’s not my fault that Windows sucks”.

There are at least two exceptions to the above.

1) Building a kit isn’t the same as studying and learning a subject. Knowing only how to solder and read English, one can put together kits ranging from a simple Adafruit Arduino kit to a complex synthesizer from PAiA. But unless the builder does research into why the kit is put together the way it is, they haven’t learned much, if anything, about what they’ve built. As a result, if there is a problem, whether it is in the instructions, the components, or their assembly, they’re going to find it very difficult to ask the right questions that will let them debug the problem. (I’ve assembled kits from both outfits and they both provide excellent kit-building instructions.)

2) Windows sucks. Ok, all computers suck, but Microsoft has spent decades innovating new and wonderful ways for Windows to suck, so I’m picking on them. There comes a point in debugging a hardware or software problem where the problem is out of operational scope. Maybe “my LED won’t turn on” because there’s a subtle bug in the USB driver between the IDE and the breadboard. The student will probably never have the domain-specific knowledge needed to start asking questions about the USB driver, and odds are the instructor won’t either. Sadly, even the person who developed the driver or tool or OS often can’t formulate the right questions, which is why the final step in many troubleshooting guides is, “reinstall the drivers and then the operating system”.
